Our world produces an enormous amount of data every day, so any tool that makes data processing less painful is a welcome relief. Fortunately, many data processing tools and services are available.

So today, we’re focusing on Amazon Kinesis. We will explore what Amazon Kinesis is, along with its uses, limits, benefits, and features. We will also look at Amazon Kinesis Data Streams, explain Kinesis Data Analytics, and compare Kinesis with other services such as SQS, SNS, and Kafka.

So then, what’s Amazon Kinesis anyway?

What’s Amazon Kinesis?

Amazon Kinesis is a family of managed, cloud-based services dedicated to collecting and processing streaming data in real time. To quote the AWS Kinesis webpage, “Amazon Kinesis makes it easy to collect, process, and analyze real-time, streaming data so you can get timely insights and react quickly to new information. Amazon Kinesis offers key capabilities to cost-effectively process streaming data at any scale, along with the flexibility to choose the tools that best suit the requirements of your application. With Amazon Kinesis, you can ingest real-time data such as video, audio, application logs, website clickstreams, and IoT telemetry data for machine learning, analytics, and other applications. Amazon Kinesis enables you to process and analyze data as it arrives and respond instantly instead of having to wait until all your data is collected before the processing can begin.”

Amazon Kinesis consists of four specialized services, or capabilities:

- Kinesis Data Streams (KDS): Amazon Kinesis Data Streams captures streaming data generated by various data sources in real time. Producer applications write to the Kinesis data stream, and consumer applications connected to the stream read the data for different types of processing.

- Kinesis Data Firehose (KDF): This service removes the need to write applications or manage resources. Users configure data producers to send data to Kinesis Data Firehose, which automatically delivers the data to the specified destinations. Users can also configure Kinesis Data Firehose to transform the data before sending it.

- Kinesis Data Analytics (KDA): This service lets users process and analyze streaming data. It provides a scalable, efficient environment that runs applications built using the Apache Flink framework. In addition, the framework offers useful operators like aggregate, filter, map, and window for querying streaming data.

- Kinesis Video Streams (KVS): This is a fully managed service used to stream live media from audio or video capture devices to the AWS Cloud. It can also be used to build applications for real-time video processing and batch-oriented video analytics.

We will examine each service in more detail later.

What Are the Limits of Amazon Kinesis?

Although the above description sounds impressive, even Kinesis has limitations and restrictions. For example:

- Stream records can be accessed for up to 24 hours by default, for up to seven days by enabling extended data retention, and for up to 365 days with long-term retention.

- By default, you can create up to 50 data streams with the on-demand capacity mode within your Amazon Web Services account. If you need a quota increase, you must contact AWS Support.

- The maximum size of the data payload in one record before Base64 encoding (also known as a data blob) is one megabyte (MB).

- You can switch between on-demand and provisioned capacity modes for each data stream in your account twice within 24 hours.

- One shard supports up to 1,000 PUT records per second, and each shard supports up to five read transactions per second.
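To make the payload limit concrete, here is a minimal client-side guard a producer might run before sending a record. It assumes the “one megabyte” limit means 1 MiB (1,048,576 bytes) of raw data measured before Base64 encoding; `MAX_PAYLOAD_BYTES`, `check_payload`, and `encoded_size` are hypothetical helpers, not part of any AWS SDK.

```python
import base64

# Assumption: the per-record limit is 1 MiB of raw data, measured before Base64 encoding.
MAX_PAYLOAD_BYTES = 1024 * 1024


def check_payload(data: bytes) -> int:
    """Return the raw payload size, or raise ValueError if it exceeds the assumed limit."""
    size = len(data)
    if size > MAX_PAYLOAD_BYTES:
        raise ValueError(f"payload is {size} bytes; limit is {MAX_PAYLOAD_BYTES} bytes")
    return size


def encoded_size(data: bytes) -> int:
    """Size of the Base64 form, illustrating how encoding inflates the payload (about 4/3)."""
    return len(base64.b64encode(data))
```

For example, `check_payload(b"x" * 2_000_000)` raises `ValueError`, while `encoded_size(b"abc")` returns 4, which is why it matters that the limit is stated on the pre-encoding size.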

How Do You Use Amazon Kinesis?

It’s easy to set up Amazon Kinesis. Follow these steps:

- Set up Kinesis:

  - Sign in to your AWS account, then select Amazon Kinesis in the AWS Management Console.

  - Click Create stream and fill in all the required fields. Then, click the “Create” button.

  - You will now see the stream in the Stream List.

- Set up users: This phase involves using Create New Users and assigning policies to each one.

- Connect Kinesis to your application: Depending on your application (Looker, Tableau Server, Domo, ZoomData), you will most likely need to open your Settings or Administrator windows and follow the prompts, usually under “Sources.”
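The “Set up users” step usually comes down to attaching an IAM policy. As a hedged sketch, the snippet below builds a policy document that lets a producer write to a single stream; the Region, account ID, and stream name are placeholders, the action list is an assumption to adjust for your workload, and `producer_policy` is a hypothetical helper.

```python
import json


def producer_policy(region: str, account_id: str, stream_name: str) -> str:
    """Build an IAM policy (as JSON) that allows writing to one Kinesis data stream."""
    stream_arn = f"arn:aws:kinesis:{region}:{account_id}:stream/{stream_name}"
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # Assumed minimal set for a producer: inspect the stream and write records.
                "Action": [
                    "kinesis:DescribeStream",
                    "kinesis:PutRecord",
                    "kinesis:PutRecords",
                ],
                "Resource": stream_arn,
            }
        ],
    }
    return json.dumps(policy, indent=2)
```

Calling `producer_policy("us-east-1", "123456789012", "example-stream")` produces a document you could paste into the IAM console or attach through the IAM API.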

What Are the Options of Amazon Kinesis?

Here are Amazon Kinesis’s most prominent features:

- It’s cost-effective: Kinesis uses a pay-as-you-go model for the resources you need and charges hourly for the throughput required.

- It’s easy to use: You can quickly create new streams, set requirements, and start streaming data.

- You can build Kinesis applications: Developers get client libraries that enable them to design and operate real-time data processing applications.

- It’s secure: You can secure your data at rest by using server-side encryption with AWS KMS master keys on any sensitive data within Kinesis Data Streams. You can also access data privately through your Amazon Virtual Private Cloud (VPC).

- It offers elastic, high-throughput, real-time processing: Kinesis lets users collect and analyze information in real time instead of waiting for a data-out report.

- It integrates with other Amazon services: For example, Kinesis integrates with Amazon DynamoDB, Amazon Redshift, and Amazon S3.

- It’s fully managed: Kinesis runs your streaming applications without requiring you to manage any infrastructure.
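Server-side encryption can be switched on for an existing stream with a single API call. This sketch uses boto3’s `start_stream_encryption` with the AWS managed key alias `alias/aws/kinesis`; pass your own KMS key ARN or alias if you manage keys yourself. The client is passed in so the function can be exercised without AWS access, and `enable_encryption` is a hypothetical helper.

```python
def enable_encryption(kinesis_client, stream_name: str, key_id: str = "alias/aws/kinesis") -> None:
    """Turn on server-side encryption with an AWS KMS key for an existing data stream."""
    # "alias/aws/kinesis" is the AWS managed key for Kinesis; a customer managed key also works.
    kinesis_client.start_stream_encryption(
        StreamName=stream_name,
        EncryptionType="KMS",
        KeyId=key_id,
    )
```

With boto3 installed and credentials configured, you might call `enable_encryption(boto3.client("kinesis"), "example-stream")`.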

All About Kinesis Data Streams (KDS)

Amazon Kinesis Data Streams collects and stores streaming data in real time. Producer applications collect streaming data from different sources and push it continuously into a Kinesis data stream. Consumer applications can then read the data from KDS and process it in real time.

Data stored in a Kinesis data stream is retained for 24 hours by default, but retention can be reconfigured for up to one year.

As part of their processing, consumer applications can save their results using other AWS services like DynamoDB, Redshift, or S3. Because these applications process the data in real or near-real time, Kinesis Data Streams is especially valuable for building time-sensitive applications such as anomaly detection or real-time dashboards.

KDS is also used for real-time data aggregation, followed by loading the aggregated data into a data warehouse or map-reduce cluster.

Defining Streams, Shards, and Records

A stream is made up of multiple data carriers called shards. The data stream’s total capacity is the sum of the capacities of all the shards that make it up. Each shard provides a fixed capacity and contains a sequence of data records. Data stored in a shard is called a record, and each record gets a sequence number assigned by Kinesis Data Streams.
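Because total capacity is the sum of per-shard capacities, sizing a provisioned stream is a matter of dividing the expected write load by one shard’s limits. The sketch below uses the write limits quoted in this article (1 MB per second and 1,000 records per second per shard) and ignores headroom for traffic spikes or uneven partition keys; `shards_needed` is a hypothetical helper.

```python
import math

# Per-shard write limits for provisioned mode, as quoted in this article.
SHARD_WRITE_MB_PER_SEC = 1.0
SHARD_WRITE_RECORDS_PER_SEC = 1000


def shards_needed(write_mb_per_sec: float, records_per_sec: float) -> int:
    """Smallest shard count whose combined write capacity covers both limits."""
    by_bytes = math.ceil(write_mb_per_sec / SHARD_WRITE_MB_PER_SEC)
    by_records = math.ceil(records_per_sec / SHARD_WRITE_RECORDS_PER_SEC)
    # A stream always has at least one shard.
    return max(1, by_bytes, by_records)
```

For example, 3.5 MB per second at 2,000 records per second needs 4 shards (bytes are the binding limit), while 0.2 MB per second at 5,000 small records per second needs 5 (the record count is).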

Creating a Kinesis Data Stream

You can create a Kinesis data stream from the AWS Command Line Interface (CLI), from the Kinesis Data Streams console in the AWS Management Console, or with the CreateStream operation of the Kinesis Data Streams API through the AWS SDK. You can also use AWS CloudFormation or AWS CDK to create a data stream as part of an infrastructure-as-code project.

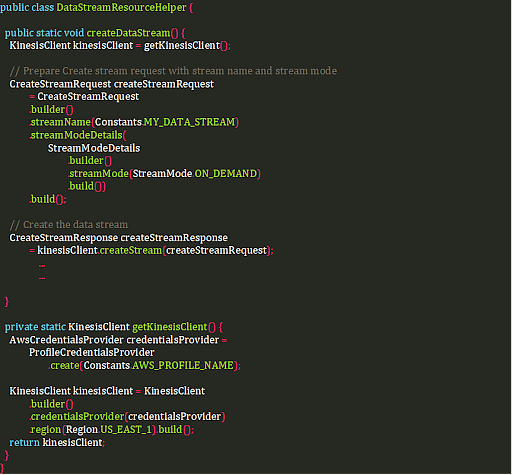

For illustrative purposes, here’s a code sample. This sample creates a data stream with the Kinesis Data Streams API, using the “on-demand” capacity mode.
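As a minimal sketch of such a request, the function below calls boto3’s (the AWS SDK for Python) `create_stream` with on-demand capacity and then waits for the stream to become active using the built-in `stream_exists` waiter. The client is passed in as a parameter so the function can be tested without AWS access; `create_on_demand_stream` and the stream name are hypothetical.

```python
def create_on_demand_stream(kinesis_client, stream_name: str) -> None:
    """Create a data stream in on-demand capacity mode and wait until it is ACTIVE."""
    kinesis_client.create_stream(
        StreamName=stream_name,
        # On-demand mode: no ShardCount; Kinesis scales shards automatically.
        StreamModeDetails={"StreamMode": "ON_DEMAND"},
    )
    # Creation is asynchronous; the "stream_exists" waiter polls until the stream is ACTIVE.
    kinesis_client.get_waiter("stream_exists").wait(StreamName=stream_name)
```

With boto3 installed and credentials configured, you might call `create_on_demand_stream(boto3.client("kinesis"), "example-stream")`.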

Kinesis Data Stream Records

These records have:

- A sequence number: A unique identifier assigned to each record by KDS.

- A partition key: This key groups records and routes them to different shards in a stream.

- A data blob: The blob’s maximum size is 1 megabyte.
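Kinesis decides which shard receives a record by hashing its partition key with MD5 into a 128-bit integer and picking the shard whose hash-key range contains that value. The sketch below reproduces that mapping under one stated assumption: the shards split the key space into equal, contiguous ranges, as they typically do right after a stream is created with uniform shards (after splits or merges, you would read the real ranges from `ListShards` instead). `hash_key` and `shard_for` are hypothetical names.

```python
import hashlib

HASH_KEY_SPACE = 2 ** 128  # MD5 yields 128-bit values


def hash_key(partition_key: str) -> int:
    """Map a partition key to a 128-bit integer: MD5 of its UTF-8 bytes, read big-endian."""
    return int.from_bytes(hashlib.md5(partition_key.encode("utf-8")).digest(), "big")


def shard_for(partition_key: str, shard_count: int) -> int:
    """Index of the shard whose hash-key range contains the key.

    Assumes equal, contiguous ranges; the last shard absorbs any rounding remainder.
    """
    range_size = HASH_KEY_SPACE // shard_count
    return min(hash_key(partition_key) // range_size, shard_count - 1)
```

Because the hash is deterministic, every record with the same partition key lands on the same shard, which is what preserves per-key ordering.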

Data Ingestion: Writing Data to Kinesis Data Streams

Applications that write data to KDS streams are called “producers.” Users can custom-build producer applications in a supported programming language using the AWS SDK or the Kinesis Producer Library (KPL). Users can also use Kinesis Agent, a stand-alone application that runs as an agent on Linux-based server environments like database servers, log servers, and web servers.
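A custom producer built on the AWS SDK can be as small as one `PutRecord` call. This sketch uses boto3’s `put_record`; the JSON event format and `send_event` are hypothetical, and production producers would typically batch with `put_records` or use the KPL for higher throughput.

```python
import json


def send_event(kinesis_client, stream_name: str, event: dict, partition_key: str) -> str:
    """Write one JSON event to a data stream and return the assigned sequence number."""
    response = kinesis_client.put_record(
        StreamName=stream_name,
        Data=json.dumps(event).encode("utf-8"),  # raw bytes; boto3 handles wire encoding
        PartitionKey=partition_key,              # same key -> same shard -> ordered per key
    )
    return response["SequenceNumber"]
```

For instance, `send_event(boto3.client("kinesis"), "example-stream", {"page": "/home"}, "user-42")` would write one clickstream event keyed by user.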

Data Consumption: Reading Data from Kinesis Data Streams

This stage involves creating a consumer application that processes data from a data stream.

Consumers of Kinesis Data Streams

The Kinesis Data Streams API is a low-level way of reading streaming data. With it, users must poll the stream, checkpoint processed records, run multiple instances, and handle other tasks themselves. For this reason, it’s usually more practical to read stream data with a higher-level consumer application. Here are some possible options:

- AWS Lambda

- Kinesis Client Library (KCL)

- Kinesis Data Firehose

- Kinesis Data Analytics
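To see why higher-level consumers are attractive, here is a minimal sketch of the low-level polling loop using boto3’s `get_shard_iterator` and `get_records` calls. It reads a single shard from the oldest retained record and deliberately leaves out checkpointing, multiple workers, and resharding, which are the chores the KCL handles. The client is passed in, and `read_shard` is a hypothetical helper.

```python
import time


def read_shard(kinesis_client, stream_name: str, shard_id: str,
               max_batches: int = 10, poll_interval: float = 1.0):
    """Poll one shard from its oldest record, yielding each record's payload (bytes)."""
    iterator = kinesis_client.get_shard_iterator(
        StreamName=stream_name,
        ShardId=shard_id,
        ShardIteratorType="TRIM_HORIZON",  # start at the oldest record still retained
    )["ShardIterator"]

    for _ in range(max_batches):
        if iterator is None:  # shard closed (e.g. after resharding) and fully read
            break
        response = kinesis_client.get_records(ShardIterator=iterator, Limit=100)
        for record in response["Records"]:
            yield record["Data"]
        iterator = response.get("NextShardIterator")
        # Each shard allows five GetRecords calls per second across all consumers,
        # so pausing between calls leaves room for other readers.
        time.sleep(poll_interval)
```

Every missing piece here (where to resume after a crash, which worker owns which shard, what to do when a shard splits) is something you would have to build yourself, which is the argument for the options listed above.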

Throughput Limits: Shared vs. Enhanced Fan-Out Consumers

Users must keep the Kinesis data stream’s throughput limits in mind when designing and operating a highly reliable data streaming system and ensuring predictable performance.

Remember, the capacity of a data stream is a function of the number of shards in the stream. A shard supports 1 MB per second and 1,000 records per second of write throughput, and 2 MB per second of read throughput. When multiple consumers read from a shard, this read throughput is shared among them; these consumers are called “shared fan-out consumers.” However, if users want dedicated throughput for their consumers, they can register them as “enhanced fan-out consumers,” each of which gets its own 2 MB per second per shard.
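A rough calculation shows what the two consumer types mean in practice. The sketch assumes ideal conditions (consumers keep up, traffic is spread evenly across shards, and GetRecords call limits are not the bottleneck); `read_mb_per_sec_per_consumer` is a hypothetical helper.

```python
# Per-shard read throughput described above (MB per second).
SHARD_READ_MB_PER_SEC = 2.0


def read_mb_per_sec_per_consumer(shards: int, consumers: int, enhanced: bool) -> float:
    """Best-case read throughput (MB/s) available to each consumer across the whole stream."""
    stream_read = SHARD_READ_MB_PER_SEC * shards
    if enhanced:
        # Enhanced fan-out: each registered consumer gets its own 2 MB/s per shard.
        return stream_read
    # Shared fan-out: all consumers split the same 2 MB/s per shard.
    return stream_read / consumers
```

With 4 shards and 3 consumers, shared fan-out leaves each consumer about 2.67 MB per second, while enhanced fan-out gives each one the full 8 MB per second.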

Kinesis Data Firehose

Kinesis Data Firehose is a fully managed service and the easiest way to load streaming data into data stores and analytics tools. Firehose captures, transforms, and loads streaming data, enabling near-real-time analytics with existing business intelligence (BI) tools and dashboards.

Creating a Kinesis Firehose Delivery Stream

You can create a Firehose delivery stream using the AWS SDK, the AWS Management Console, or infrastructure-as-code tools such as AWS CloudFormation and AWS CDK.
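Here is a hedged sketch of the SDK route using boto3’s `create_delivery_stream`. It assumes a Direct PUT stream delivering to Amazon S3, an existing bucket, and an IAM role that Firehose can assume; the prefix, buffering values, and compression choice are illustrative rather than recommendations, and `create_s3_delivery_stream` is a hypothetical helper.

```python
def create_s3_delivery_stream(firehose_client, name: str, bucket_arn: str, role_arn: str) -> str:
    """Create a Direct PUT delivery stream that buffers records and writes them to S3."""
    response = firehose_client.create_delivery_stream(
        DeliveryStreamName=name,
        DeliveryStreamType="DirectPut",  # producers call the Firehose API directly
        ExtendedS3DestinationConfiguration={
            "RoleARN": role_arn,  # IAM role Firehose assumes to write to the bucket
            "BucketARN": bucket_arn,
            "Prefix": "events/",  # hypothetical object key prefix
            # Flush when 5 MB accumulate or 300 seconds pass, whichever comes first.
            "BufferingHints": {"SizeInMBs": 5, "IntervalInSeconds": 300},
            "CompressionFormat": "GZIP",
        },
    )
    return response["DeliveryStreamARN"]
```

With boto3, the client would come from `boto3.client("firehose")`.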

Sending Data to a Kinesis Firehose Delivery Stream

You can send data to a Firehose delivery stream from several different sources:

- Kinesis Data Stream

- Kinesis Agent

- Kinesis Data Firehose API

- Amazon CloudWatch Logs

- CloudWatch Events

- AWS IoT as the data source
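When the source is your own code (the Kinesis Data Firehose API entry above), sending a record is a single call. This sketch uses boto3’s `put_record` on a Firehose client; the trailing newline is a common convention for line-delimited output rather than a requirement, and `send_to_firehose` is a hypothetical helper.

```python
import json


def send_to_firehose(firehose_client, stream_name: str, event: dict) -> str:
    """Send one JSON event to a delivery stream via the Firehose API; return its record ID."""
    # Firehose concatenates records as-is, so a newline keeps S3 objects line-delimited.
    payload = (json.dumps(event) + "\n").encode("utf-8")
    response = firehose_client.put_record(
        DeliveryStreamName=stream_name,
        Record={"Data": payload},
    )
    return response["RecordId"]
```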

Explaining Data Transformation in a Firehose Delivery Stream

Users can configure a Kinesis Data Firehose delivery stream to transform and convert streaming data from the data source before delivering the transformed data to its destination, using these two methods:

- Transforming Incoming Data

- Converting the Incoming Data Record Format
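The first method, transforming incoming data, is usually done with an AWS Lambda function that Firehose invokes with a batch of Base64-encoded records. Each record must come back with the same `recordId`, a `result` of `Ok`, `Dropped`, or `ProcessingFailed`, and Base64-encoded `data`. The sketch below follows that contract; the JSON shape of the events and the “drop debug logs” rule are hypothetical.

```python
import base64
import json


def lambda_handler(event, context):
    """Firehose transformation sketch: drop debug events, uppercase the "level" of the rest."""
    output = []
    for record in event["records"]:
        try:
            payload = json.loads(base64.b64decode(record["data"]))
        except ValueError:
            # Malformed input: report it so Firehose treats it as a failed record.
            output.append({"recordId": record["recordId"], "result": "ProcessingFailed", "data": record["data"]})
            continue
        if payload.get("level") == "debug":
            # "Dropped" discards the record without counting it as a failure.
            output.append({"recordId": record["recordId"], "result": "Dropped", "data": record["data"]})
            continue
        payload["level"] = str(payload.get("level", "info")).upper()
        encoded = base64.b64encode((json.dumps(payload) + "\n").encode("utf-8")).decode("ascii")
        output.append({"recordId": record["recordId"], "result": "Ok", "data": encoded})
    return {"records": output}
```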

Firehose Delivery Stream Data Delivery Format

After the delivery stream receives the streaming data, the data is automatically delivered to the configured destination. Each destination type that Kinesis Data Firehose supports has its own specific configuration for data delivery.

Explaining Kinesis Data Analytics

Kinesis Data Analytics is the easiest way to analyze and process real-time streaming data. It feeds real-time dashboards, generates time-series analytics, and creates real-time notifications and alerts.

What’s the Difference Between Amazon Kinesis Data Streams and Kinesis Data Analytics?

We use Kinesis Data Streams to write consumer applications with custom code designed to perform any needed streaming data processing. However, those applications usually run on server instances like EC2, in infrastructure we provision and manage. Kinesis Data Analytics, on the other hand, provides an automatically provisioned environment designed to run applications built with the Flink framework, and it scales automatically to handle any volume of incoming data.

Also, Kinesis Data Streams consumer applications typically write records to a destination such as an S3 bucket or a DynamoDB table once some processing is done. Kinesis Data Analytics applications, however, perform queries like aggregations and filtering by applying different windows to the streaming data. This process identifies trends and patterns for real-time alerts and dashboard feeds.
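To make “applying windows” concrete, here is a plain-Python illustration of a tumbling window count, the kind of aggregation a Flink job would run continuously. It is not the Flink API: it processes a finite list, uses the event timestamps as given, and omits late-data handling and state management; `tumbling_window_counts` is a hypothetical helper.

```python
from collections import Counter, defaultdict


def tumbling_window_counts(events, window_seconds: int):
    """Count events per key in fixed, non-overlapping time windows.

    `events` is an iterable of (timestamp_seconds, key) pairs; the result maps each
    window's start time to a Counter of the keys seen in that window.
    """
    windows = defaultdict(Counter)
    for timestamp, key in events:
        window_start = int(timestamp // window_seconds) * window_seconds
        windows[window_start][key] += 1
    return dict(windows)
```

For instance, with 60-second windows, events at 0, 30, and 59 seconds fall in the window starting at 0, and an event at 61 seconds opens the window starting at 60.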

Flink Application Structure

Flink applications consist of:

- Execution Environment: This environment is defined in the main application class, and it is responsible for creating the data pipeline, which contains the business logic and is composed of one or more operators chained together.

- Data Source: The application consumes data through a source, and a source connector reads data from a Kinesis data stream, an Amazon S3 bucket, or other such resources.

- Processing Operators: The application processes data with processing operators, which transform the input data that originates from the data sources. Once the transformation is complete, the application forwards the modified data to the data sinks.

- Data Sink: The application sends data to external destinations through sinks. Sink connectors write data to a Kinesis Data Firehose delivery stream, a Kinesis data stream, an Amazon S3 bucket, or other such destinations.
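The four parts above can be mimicked in plain Python to show how they fit together. This is an analogy rather than Flink code: `run_pipeline` plays the role of the execution environment, an iterable stands in for the source connector, chained generator functions act as operators, and a callable acts as the sink. All names are hypothetical, and real Flink adds parallelism, state, and fault tolerance that this sketch omits.

```python
from typing import Callable, Iterable, List

Operator = Callable[[Iterable[str]], Iterable[str]]


def run_pipeline(source: Iterable[str], operators: List[Operator], sink: Callable[[str], None]) -> None:
    """Wire a source through chained operators into a sink, mirroring a Flink job graph."""
    stream = source
    for operator in operators:
        stream = operator(stream)  # each operator lazily wraps the previous stream
    for item in stream:
        sink(item)


# Hypothetical operators standing in for Flink's filter and map.
def drop_blank(lines: Iterable[str]) -> Iterable[str]:
    return (line for line in lines if line.strip())


def to_upper(lines: Iterable[str]) -> Iterable[str]:
    return (line.upper() for line in lines)
```

For example, `run_pipeline(["a", "", "b"], [drop_blank, to_upper], print)` prints `A` and `B`.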

Creating a Flink Application

Users create Flink applications and run them using the Kinesis Data Analytics service. Users can write these applications in Java, Python, or Scala.

Configuring a Kinesis Data Stream as Both a Source and a Sink

Once the data pipeline is tested, users can modify the data source in the code so that it connects to a Kinesis data stream, which ingests the streaming data to be processed.

Deploying a Flink Application to Kinesis Data Analytics

Kinesis Data Analytics creates jobs to run Flink applications, and these jobs look for the compiled code in an S3 bucket. Therefore, once the application is compiled and packaged, users must create an application in Kinesis Data Analytics, configuring the following components:

- Input: Users map the streaming source to an in-application data stream, and the data flows from one or more data sources into the in-application data stream.

- Application code: This component consists of the location of the S3 bucket that holds the compiled Flink application. The application reads from an in-application data stream associated with a streaming source, then writes to an in-application data stream associated with output.

- Output: This component consists of one or more in-application streams that store intermediate results. Users can then optionally configure the application’s output to persist data from specific in-application streams and send it to an external destination.
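Programmatically, the same registration can be done with the `kinesisanalyticsv2` API. The hedged sketch below assumes the compiled JAR has already been uploaded to S3 and that an execution role exists; the runtime version is an example value to be matched to your build, and `create_flink_application` is a hypothetical helper. In a Flink application, the stream sources and sinks are typically configured in the application code itself, so this request mainly points the service at the packaged code.

```python
def create_flink_application(kda_client, name: str, role_arn: str, bucket_arn: str, jar_key: str) -> str:
    """Register a packaged Flink application stored in S3 with Kinesis Data Analytics."""
    response = kda_client.create_application(
        ApplicationName=name,
        RuntimeEnvironment="FLINK-1_15",  # example; match the Flink version you built against
        ServiceExecutionRole=role_arn,    # role the service assumes to read S3 and the streams
        ApplicationConfiguration={
            "ApplicationCodeConfiguration": {
                "CodeContent": {
                    "S3ContentLocation": {"BucketARN": bucket_arn, "FileKey": jar_key},
                },
                "CodeContentType": "ZIPFILE",  # the packaged JAR/ZIP in S3
            },
        },
    )
    return response["ApplicationDetail"]["ApplicationARN"]
```

With boto3, the client would come from `boto3.client("kinesisanalyticsv2")`.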

Using Job Creation to Run the Kinesis Data Analytics Application

Users can run an application by choosing “Run” on the application’s page in the AWS console. When users run a Kinesis Data Analytics application, the service creates an Apache Flink job. The Job Manager manages the job’s execution and the resources it uses. It also divides the execution of the application into tasks, and a Task Manager, in turn, oversees each task. Users monitor application performance by examining the performance of each Task Manager or the Job Manager.
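Choosing “Run” in the console corresponds to the `StartApplication` API. This sketch calls boto3’s `start_application` and then polls `describe_application` until the status leaves `STARTING` or a timeout passes; the timeout and polling interval are arbitrary, and `start_and_wait` is a hypothetical helper.

```python
import time


def start_and_wait(kda_client, name: str, timeout_s: float = 600, poll_s: float = 15) -> str:
    """Start an application and return its status once it is no longer STARTING (or on timeout)."""
    kda_client.start_application(ApplicationName=name)
    deadline = time.monotonic() + timeout_s
    while True:
        detail = kda_client.describe_application(ApplicationName=name)["ApplicationDetail"]
        status = detail["ApplicationStatus"]
        if status != "STARTING" or time.monotonic() >= deadline:
            return status  # typically RUNNING on success
        time.sleep(poll_s)
```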

Developing Flink Applications Interactively with Notebooks

Users can also author Flink applications in notebooks, a tool commonly used in data science work. A notebook is a web-based interactive development environment in which data scientists write and execute code and visualize the results. Studio notebooks can be created in the AWS Management Console.

All About Kinesis Video Streams (KVS)

Kinesis Video Streams is a fully managed service used to:

- Connect and stream audio, video, and other time-encoded data from different capture devices. This process uses infrastructure provisioned dynamically within the AWS Cloud.

- Build applications that operate on live data streams, using the ingested data frame by frame and in real time for low-latency processing.

- Securely and durably store any media data for a default retention period of one day and a maximum of 10 years.

- Create ad hoc or batch applications that operate on durably persisted data without strict latency requirements.

The Key Concepts: Producer, Consumer, and Kinesis Video Stream

The service is built around the idea of a producer sending streaming data to a stream, then a consumer application reading the transmitted data from the stream. The key concepts are:

- Producer: This can be any video-generating device, like a security camera, a body-worn camera, a smartphone camera, or a dashboard camera. Producers can also send non-video data, such as images, audio feeds, or RADAR data. Essentially, a producer is any source that puts data into the video stream.

- Kinesis Video Stream: This resource transports live video data, optionally stores it, and then makes the data available for consumption in real time and on an ad hoc or batch basis.

- Consumer: The consumer is an application that reads fragments and frames from KVS for viewing, processing, or analysis.

Creating a Kinesis Video Stream

Users can create a Kinesis video stream with the AWS Management Console.
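Besides the console, a video stream can be created through the SDK. This sketch uses boto3’s `kinesisvideo` client; the 24-hour retention mirrors the one-day default mentioned above (a value of 0 means media is not stored), the media type is an example, and `create_video_stream` is a hypothetical helper.

```python
def create_video_stream(kvs_client, name: str, retention_hours: int = 24) -> str:
    """Create a Kinesis video stream that keeps media for `retention_hours` hours."""
    response = kvs_client.create_stream(
        StreamName=name,
        DataRetentionInHours=retention_hours,  # 0 would disable storage entirely
        MediaType="video/h264",                # example MIME type hint for consumers
    )
    return response["StreamARN"]
```

With boto3, the client would come from `boto3.client("kinesisvideo")`.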

Sending Media Data to a Kinesis Video Stream

Next, users must configure a producer to put data into the Kinesis video stream. The producer uses the Kinesis Video Streams Producer SDK, which pulls the video data (in the form of frames) from media sources and uploads it to KVS. The SDK takes care of every underlying task required to package the fragments and frames created by the device’s media pipeline. It can also handle token rotation for secure and uninterrupted streaming, stream creation, processing of acknowledgments returned by Kinesis Video Streams, and other jobs.

Consuming Media Data from a Kinesis Video Stream

Finally, users consume the media data by viewing it in the Kinesis Video Streams console or by building an application that reads the media data from a Kinesis video stream. The Kinesis Video Stream Parser Library is a toolset used in Java applications to consume the MKV data from a Kinesis video stream.
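For an application-based consumer, reading live media takes two steps: ask Kinesis Video Streams for the endpoint that serves `GET_MEDIA`, then call `GetMedia` against that endpoint. The hedged sketch below does both with boto3-style clients passed in (so it can be exercised without AWS); it returns the raw MKV byte stream, which a parser such as the Java Parser Library would then split into fragments and frames. `open_live_media` and its `media_client_factory` argument are hypothetical.

```python
def open_live_media(kvs_client, media_client_factory, stream_name: str):
    """Return the streaming MKV payload for a video stream, starting at the live edge."""
    # Step 1: GetMedia is served from a stream-specific endpoint.
    endpoint = kvs_client.get_data_endpoint(StreamName=stream_name, APIName="GET_MEDIA")["DataEndpoint"]
    # Step 2: build a media client bound to that endpoint and request fragments from "now".
    media_client = media_client_factory(endpoint)
    response = media_client.get_media(
        StreamName=stream_name,
        StartSelector={"StartSelectorType": "NOW"},
    )
    return response["Payload"]  # a stream of MKV fragments to feed into a parser
```

With boto3, you might call `open_live_media(boto3.client("kinesisvideo"), lambda url: boto3.client("kinesis-video-media", endpoint_url=url), "example-video-stream")`.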

Apache Kafka vs AWS Kinesis

Both Kafka and Kinesis are designed to ingest and process multiple large-scale data streams from disparate and versatile sources. Both platforms replace traditional message brokers by ingesting large data streams that must be processed and delivered to different services and applications.

The most significant difference between Kinesis and Kafka is that the former is a managed service that needs only minimal setup and configuration. Kafka, on the other hand, is an open-source solution that requires considerable investment and knowledge to configure, often requiring setup times measured in weeks, not hours.

Amazon Kinesis uses key concepts such as Data Producers, Data Consumers, Data Records, Data Streams, Shards, Partition Keys, and Sequence Numbers. As we’ve already seen, it consists of four specialized services.

Kafka, on the other hand, uses Brokers, Consumers, Records, Producers, Logs, Partitions, Topics, and Clusters. Additionally, Kafka consists of five core APIs:

- The Producer API allows applications to send data streams to topics in the Kafka cluster.

- The Consumer API lets applications read data streams from topics in the Kafka cluster.

- The Streams API transforms data streams from input topics to output topics.

- The Connect API allows implementing connectors that continuously pull from some source system or application into Kafka, or push from Kafka into some sink system or application.

- The AdminClient API enables managing and inspecting brokers, topics, and other Kafka objects.

Additionally, Kafka offers SDK support for Java, while Kinesis supports Android, Java, Go, and .NET, among others. Kafka is more flexible, giving users greater control over configuration details. However, Kinesis’s rigidity is a feature: that inflexibility means standardized configuration, which in turn is responsible for its rapid setup time.

SQS vs. SNS vs. Kinesis

Here’s a quick comparison between SQS (Amazon Simple Queue Service), SNS (Amazon Simple Notification Service), and Kinesis:

| SQS | SNS | Kinesis |
| --- | --- | --- |
| Consumers pull the data. | Pushes data to many subscribers. | Consumers pull data. |
| Data gets deleted after it’s consumed. | You can have up to 10,000,000 subscribers per topic. | You can have as many consumers as you need. |
| You can have as many workers (or consumers) as you need. | Data isn’t persisted, meaning it’s lost if not delivered. | It’s possible to replay data. |
| There’s no need to provision throughput. | It supports up to 100,000 topics. | It’s meant for real-time big data, analytics, and ETL. |
| There’s no ordering guarantee, except with FIFO queues. | There’s no need to provision throughput. | Data expires after a certain number of days. |
| Individual messages can be delayed. | It integrates with SQS for a fan-out architecture pattern. | You must provision throughput (shards), unless you use on-demand capacity mode. |


Do You Want to Become a Data Engineer?

If working with data in ways like those shown above interests you, you may enjoy a career in data engineering! Simplilearn offers a Post Graduate Program in Data Engineering, held in partnership with Purdue University and in collaboration with IBM, to help you master all the crucial data engineering skills.

This bootcamp is ideal for professionals, covering essential topics like the Hadoop framework, data processing using Spark, data pipelines with Kafka, big data on AWS, and Azure cloud infrastructure. Simplilearn delivers this program via live sessions, industry projects, masterclasses, IBM hackathons, and Ask Me Anything sessions.

Glassdoor reports that a Data Engineer in the United States may earn a yearly average of $117,476.

So, visit Simplilearn today and start a challenging yet rewarding new career!

Source: www.simplilearn.com