Thanks to big data, Hadoop has become a familiar term and has found its prominence in today’s digital world. When anyone can generate massive amounts of data with just one click, the Hadoop framework is vital. Have you ever wondered what Hadoop is and what all the fuss is about? This article will give you the answers! You will learn all about Hadoop and its relationship with Big Data.

What Is Hadoop?

Hadoop is a framework that uses distributed storage and parallel processing to store and manage big data. It is the software most used by data analysts to handle big data, and its market size continues to grow. There are three components of Hadoop:

- Hadoop HDFS – Hadoop Distributed File System (HDFS) is the storage unit.

- Hadoop MapReduce – Hadoop MapReduce is the processing unit.

- Hadoop YARN – Yet Another Resource Negotiator (YARN) is the resource management unit.

Hadoop Through an Analogy

Before jumping into the technicalities of Hadoop, let us understand it through an interesting story. By the end of this story, you will comprehend Hadoop, Big Data, and the necessity for Hadoop.

Introducing Jack, a grape farmer. He harvests the grapes in the fall, stores them in a storage room, and finally sells them in the nearby town. He kept this routine going for years until people began to demand other fruits. This rise in demand led to him growing apples and oranges, in addition to grapes.

Unfortunately, the whole process turned out to be time-consuming and difficult for Jack to do single-handedly.

So, Jack hires two more people to work alongside him. The extra help speeds up the harvesting process, as the three of them can work simultaneously on different products.

However, this takes a nasty toll on the storage room, as the storage area becomes a bottleneck for storing and accessing all the fruits.

Jack thought through this problem and came up with a solution: give each person a separate storage space. So, when Jack receives an order for a fruit basket, he can complete the order on time, as all three can work with their own storage area.

Thanks to Jack’s solution, everyone can finish their orders on time and without trouble. Even with sky-high demand, Jack can complete his orders.


The Rise of Big Data

So, now you might be wondering how Jack’s story is related to Big Data and Hadoop. Let’s draw a comparison between Jack’s story and Big Data.

Back in the day, there was limited data generation. Hence, storing and processing data was done with a single storage unit and a single processor, respectively. In the blink of an eye, data generation increased by leaps and bounds. Not only did it increase in volume but also in variety. Therefore, a single processor was incapable of processing high volumes of different varieties of data. Speaking of varieties of data, you can have structured, semi-structured, and unstructured data.

This situation is analogous to how Jack found it hard to harvest different types of fruits single-handedly. Thus, just like Jack’s approach, analysts needed multiple processors to process various data types.

Multiple machines help process data in parallel. However, the shared storage unit became a bottleneck, resulting in network overhead.

To address this issue, the storage unit is distributed among the processors. The distribution resulted in storing and accessing data efficiently and without network overhead. This method is called parallel processing with distributed storage.

This setup is how data engineers and analysts manage big data effectively. Now, do you see the connection between Jack’s story and big data management?

Big Data and Its Challenges

Big Data refers to the massive amount of data that cannot be stored, processed, and analyzed using traditional techniques.

The main elements of Big Data are:

- Volume – There is a massive amount of data generated every second.

- Velocity – The speed at which data is generated, collected, and analyzed

- Variety – The different types of data: structured, semi-structured, unstructured

- Value – The ability to turn data into useful insights for your business

- Veracity – Trustworthiness in terms of quality and accuracy

The main challenges that Big Data faced and the solutions for each are listed below:

| Challenges | Solution |
| --- | --- |
| Single central storage | Distributed storage |
| Serial processing | Parallel processing |
| Lack of ability to process unstructured data | Ability to process every type of data |

Let us now understand what Hadoop is in detail.

Why Is Hadoop Important?

Hadoop is a beneficial technology for data analysts. There are many essential features in Hadoop that make it so important and user-friendly.

- The system is able to store and process enormous amounts of data at an extremely fast rate. The data can be structured, semi-structured, or unstructured.

- It enhances operational decision-making and supports batch workloads for historical analysis.

- Organisations can store data first and filter it later for specific analytical uses as needed.

- Hadoop is scalable: a large number of nodes can be added, so organisations are able to take on more data.

- A protection mechanism prevents applications and data processing from being harmed by hardware failures. Work assigned to nodes that go down is automatically redirected to other nodes, allowing applications to run without interruption.

Based on the above reasons, we can say that Hadoop is important.

Who Uses Hadoop?

Hadoop is a popular big data tool, used by many companies worldwide. Here’s a brief sample of successful Hadoop users:

- British Airways

- Uber

- The Bank of Scotland

- Netflix

- The National Security Agency (NSA) of the United States

- The UK’s Royal Mail system

- Expedia

Now that we have some idea of Hadoop’s popularity, it’s time for a closer look at its components to gain an understanding of what Hadoop is.

Components of Hadoop

Hadoop is a framework that uses distributed storage and parallel processing to store and manage Big Data. It is the most commonly used software to handle Big Data. There are three components of Hadoop.

- Hadoop HDFS – Hadoop Distributed File System (HDFS) is the storage unit of Hadoop.

- Hadoop MapReduce – Hadoop MapReduce is the processing unit of Hadoop.

- Hadoop YARN – Hadoop YARN is the resource management unit of Hadoop.

Let us take a detailed look at Hadoop HDFS in this part of the What Is Hadoop article.

Hadoop HDFS

Data is stored in a distributed manner in HDFS. There are two components of HDFS – name node and data node. While there is only one name node, there can be multiple data nodes.

HDFS is specially designed for storing huge datasets on commodity hardware. An enterprise version of a server costs roughly $10,000 per terabyte. If you need to buy 100 of these enterprise servers, the cost goes up to a million dollars.

Hadoop enables you to use commodity machines as your data nodes. This way, you don’t have to spend millions of dollars just on your data nodes. However, the name node is typically an enterprise server.

Features of HDFS

- Provides distributed storage

- Can be implemented on commodity hardware

- Provides data security

- Highly fault-tolerant – If one machine goes down, its data remains available from the replicas held on other machines

Master and Slave Nodes

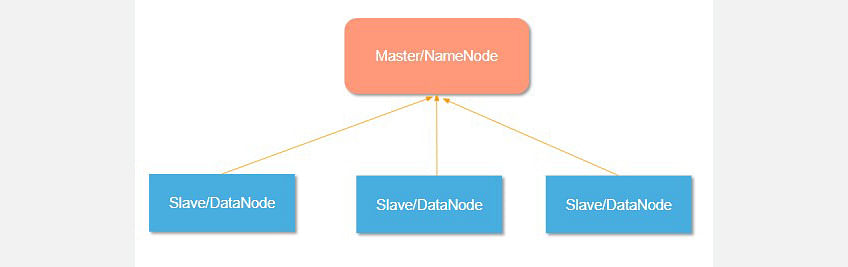

Master and slave nodes form the HDFS cluster. The name node is called the master, and the data nodes are called the slaves.

The name node is responsible for the workings of the data nodes. It also stores the metadata.

The data nodes read, write, process, and replicate the data. They also send signals, known as heartbeats, to the name node. These heartbeats show the status of the data node.
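To make the heartbeat idea concrete, here is a minimal sketch in Python of how a master could decide that a data node is no longer alive. The class name `HeartbeatMonitor`, the node names, and the 30-second timeout are all assumptions made for illustration. This is not the HDFS implementation, nor are these HDFS defaults.

```python
import time
from dataclasses import dataclass, field


@dataclass
class HeartbeatMonitor:
    """Toy master-side tracker of data node heartbeats (illustrative only)."""
    timeout_seconds: float  # hypothetical threshold, not an HDFS default
    last_seen: dict = field(default_factory=dict)

    def record_heartbeat(self, node_id, now=None):
        # Remember the most recent time this data node reported in.
        self.last_seen[node_id] = time.monotonic() if now is None else now

    def dead_nodes(self, now=None):
        # A node counts as dead once its last heartbeat is older than the timeout.
        now = time.monotonic() if now is None else now
        return sorted(
            node for node, seen in self.last_seen.items()
            if now - seen > self.timeout_seconds
        )


monitor = HeartbeatMonitor(timeout_seconds=30.0)
monitor.record_heartbeat("datanode-1", now=0.0)
monitor.record_heartbeat("datanode-2", now=0.0)
monitor.record_heartbeat("datanode-1", now=25.0)  # datanode-2 stays silent
print(monitor.dead_nodes(now=40.0))  # ['datanode-2']
```

Passing `now` explicitly keeps the example deterministic. A real monitor would rely on the clock instead.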

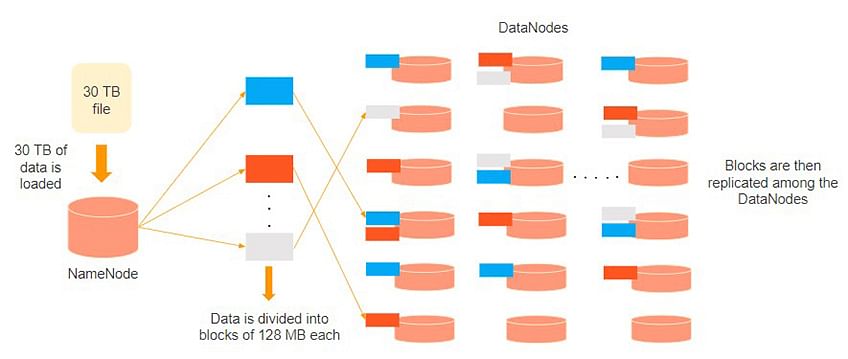

Consider that 30 TB of data is loaded into the cluster. The name node directs how it is distributed across the data nodes, and this data is replicated among the data nodes. You can see in the image above that the blue, gray, and purple data are replicated among the three data nodes.

Replication of the data is performed three times by default. It is done this way so that if a commodity machine fails, you can replace it with a new machine, and the same data is still available from the other replicas.
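The effect of three-way replication can be sketched with a simplified placement routine. The round-robin strategy, the function names, and the node names below are assumptions chosen for illustration. Real HDFS uses a more elaborate, rack-aware placement policy.

```python
def place_replicas(block_ids, data_nodes, replication_factor=3):
    """Assign each block to `replication_factor` distinct data nodes, round-robin."""
    if replication_factor > len(data_nodes):
        raise ValueError("need at least as many data nodes as replicas")
    placement = {}
    for index, block in enumerate(block_ids):
        placement[block] = [
            data_nodes[(index + offset) % len(data_nodes)]
            for offset in range(replication_factor)
        ]
    return placement


def surviving_blocks(placement, failed_node):
    """Return the blocks that still have at least one replica after a node fails."""
    return [
        block for block, nodes in placement.items()
        if any(node != failed_node for node in nodes)
    ]


nodes = ["dn1", "dn2", "dn3", "dn4"]  # hypothetical data node names
placement = place_replicas(["blue", "gray", "purple"], nodes)
for block, holders in placement.items():
    print(block, "->", holders)
print(surviving_blocks(placement, failed_node="dn2"))
```

Because every block lives on three different nodes, losing any single node still leaves all blocks readable.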

Let us focus on Hadoop MapReduce in the following section of the What Is Hadoop article.

Hadoop MapReduce

Hadoop MapReduce is the processing unit of Hadoop. In the MapReduce approach, the processing is done at the slave nodes, and the final result is sent to the master node.

Instead of moving the data, the code that processes it is sent to where the data resides. This code is usually very small in comparison to the data itself. You only need to send a few kilobytes’ worth of code to perform a heavy-duty process on the computers that hold the data.

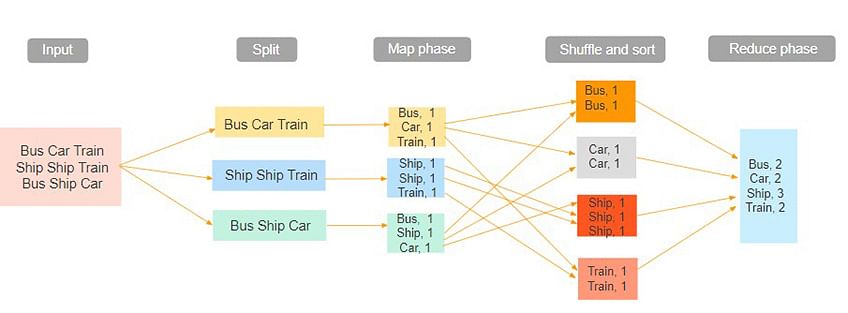

The input dataset is first split into chunks of data. In this example, the input has three lines of text with three separate entities – “bus car train,” “ship ship train,” “bus ship car.” The dataset is then split into three chunks, based on these entities, and processed in parallel.

In the map phase, each word is emitted as a key with a value of 1. In this case, the keys are bus, car, ship, and train.

These key-value pairs are then shuffled and sorted together based on their keys. In the reduce phase, the aggregation takes place, and the final output is obtained.
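The three phases can be imitated in a few lines of plain Python using the same input. This is only a single-process sketch of the idea, with function names chosen for illustration. It does not use the Hadoop MapReduce API and does not run anything in parallel.

```python
from collections import defaultdict


def map_phase(line):
    """Emit a (word, 1) pair for every word in one input split."""
    return [(word, 1) for word in line.split()]


def shuffle_and_sort(mapped_pairs):
    """Group values by key and order the groups by key."""
    grouped = defaultdict(list)
    for key, value in mapped_pairs:
        grouped[key].append(value)
    return sorted(grouped.items())


def reduce_phase(grouped):
    """Sum the values collected for each key."""
    return {key: sum(values) for key, values in grouped}


splits = ["bus car train", "ship ship train", "bus ship car"]
mapped = [pair for line in splits for pair in map_phase(line)]
counts = reduce_phase(shuffle_and_sort(mapped))
print(counts)  # {'bus': 2, 'car': 2, 'ship': 3, 'train': 2}
```

In a real cluster, each split would be mapped on a different node, and the shuffle step would move pairs with the same key to the same reducer.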

Hadoop YARN is the next concept we shall focus on in the What Is Hadoop article.

Hadoop YARN

Hadoop YARN stands for Yet Another Resource Negotiator. It is the resource management unit of Hadoop and is available as a component of Hadoop version 2.

- Hadoop YARN acts like an OS to Hadoop. It is a resource management layer that sits on top of HDFS.

- It is responsible for managing cluster resources to make sure you don’t overload one machine.

- It performs job scheduling to make sure that the jobs are scheduled in the right place.

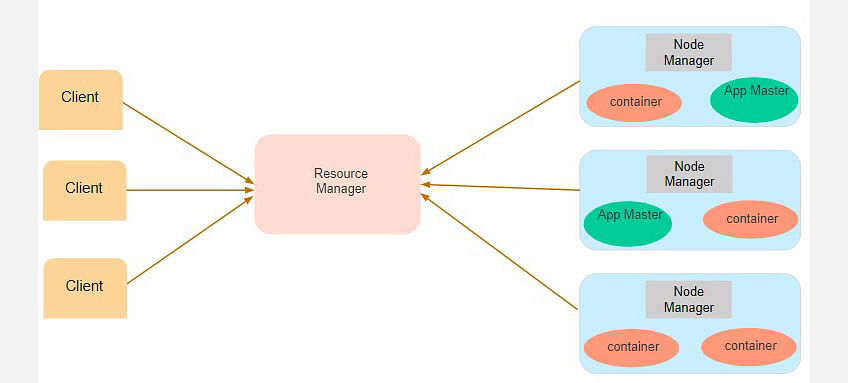

Suppose a client machine wants to run a query or fetch some code for data analysis. This job request goes to the resource manager (Hadoop YARN), which is responsible for resource allocation and management.

In the node section, each of the nodes has its own node manager. These node managers manage the nodes and monitor the resource usage in the node. The containers hold a collection of physical resources, which could be RAM, CPU, or hard drives. Whenever a job request comes in, the app master requests a container from the node manager. Once the node manager obtains the resource, it reports back to the resource manager.
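As a rough illustration of container allocation, the sketch below picks a node that has enough free memory and CPU for a request and reserves those resources. The `Node` fields, the `allocate_container` function, and the "most free memory first" rule are hypothetical simplifications. They are not YARN’s scheduler or its API.

```python
from dataclasses import dataclass


@dataclass
class Node:
    """A worker node as its node manager might report it (hypothetical fields)."""
    name: str
    free_memory_mb: int
    free_vcores: int


def allocate_container(nodes, memory_mb, vcores):
    """Grant a container on the fitting node with the most free memory.

    Returns the chosen node's name, or None if no node can host the container.
    """
    candidates = [
        n for n in nodes
        if n.free_memory_mb >= memory_mb and n.free_vcores >= vcores
    ]
    if not candidates:
        return None
    chosen = max(candidates, key=lambda n: n.free_memory_mb)
    # Reserve the resources so later requests see the reduced capacity.
    chosen.free_memory_mb -= memory_mb
    chosen.free_vcores -= vcores
    return chosen.name


cluster = [Node("node-a", 4096, 4), Node("node-b", 8192, 2)]
print(allocate_container(cluster, memory_mb=2048, vcores=2))  # node-b
print(allocate_container(cluster, memory_mb=2048, vcores=2))  # node-a
print(allocate_container(cluster, memory_mb=8192, vcores=1))  # None
```

Tracking free resources per node is what lets the scheduler avoid overloading a single machine.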

How Does Hadoop Work?

The primary function of Hadoop is to process data in an organised manner across a cluster of commodity hardware. The client submits the data or program that needs to be processed. Hadoop HDFS stores the data. YARN and MapReduce divide the resources and assign the tasks that process the data. Let’s look at the working of Hadoop in detail.

- The client’s input data is divided into 128 MB blocks by HDFS. Blocks are replicated according to the replication factor, and various DataNodes house the blocks and their duplicates.

- The user can process the data once all blocks have been placed on HDFS DataNodes.

- The client sends Hadoop the MapReduce program to process the data.

- The ResourceManager then schedules the user-submitted program on specific cluster nodes.

- The output is written back to HDFS once processing has been completed by all nodes.
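The first step is simple arithmetic, which the following sketch works through for a hypothetical 500 MB file. The 128 MB block size comes from the steps above, and the replication factor of 3 is the default mentioned earlier in the article. The function name is an assumption for illustration.

```python
import math

BLOCK_SIZE_MB = 128      # block size stated in the steps above
REPLICATION_FACTOR = 3   # default replication mentioned earlier in the article


def hdfs_footprint(file_size_mb, block_size_mb=BLOCK_SIZE_MB,
                   replication=REPLICATION_FACTOR):
    """Return (number of blocks, size of last block in MB, total stored MB)."""
    if file_size_mb <= 0:
        return 0, 0, 0
    blocks = math.ceil(file_size_mb / block_size_mb)
    last_block_mb = file_size_mb - (blocks - 1) * block_size_mb
    # The final block only occupies as much space as the data it holds.
    total_stored_mb = file_size_mb * replication
    return blocks, last_block_mb, total_stored_mb


print(hdfs_footprint(500))  # (4, 116, 1500)
```

So a 500 MB file becomes three full 128 MB blocks plus one 116 MB block, and the cluster stores 1,500 MB in total once every block has three copies.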

Hadoop Distributed File System:

HDFS stands for Hadoop Distributed File System. It is the primary data storage system in Hadoop applications, and it is spread across the whole cluster. In HDFS, data is written once on the server and then read many times as needed. The goals of HDFS are as follows.

- The ability to recover from hardware failures in a timely manner

- Access to streaming data

- Accommodation of large data sets

- Portability

The Hadoop Distributed File System includes two types of nodes. They are the Name Node and the Data Node.

Name Node:

The Name Node is the primary component of HDFS. It maintains the file system along with its namespace. Actual data is not stored in the Name Node. Instead, it stores metadata, such as block information and changes to the file system.

Data Node:

The Data Node follows the instructions given by the Name Node. Data Nodes are also known as ‘slave nodes’. These nodes store the actual data provided by the client and simply follow the commands of the Name Node.

Job Tracker:

In Hadoop version 1, the primary function of the Job Tracker is resource management. The Job Tracker determines the location of the data by communicating with the Name Node. It also helps in finding a suitable Task Tracker and tracks MapReduce workloads from the local node to the slave nodes. In Hadoop, there is only one instance of the Job Tracker. It monitors the individual Task Trackers, tracks their status, and helps in the execution of MapReduce in Hadoop.

Task Tracker:

The Task Tracker is the slave daemon in the cluster that accepts all the instructions from the Job Tracker. It runs in its own process. Task Trackers monitor all their tasks by capturing the input and output codes. The Task Tracker helps in the mapping, shuffling, and reducing operations on the data, and it arranges different slots to perform different tasks. It continuously sends status updates to the Job Tracker and also reports the number of slots it has available. If a Task Tracker is unresponsive, the Job Tracker assigns its work to other nodes.

How Hadoop Improves on Traditional Databases

Understanding what Hadoop is requires further understanding of how it differs from traditional databases.

Hadoop uses HDFS (Hadoop Distributed File System) to divide massive amounts of data into manageable smaller pieces, which are then saved on clusters of commodity servers. This offers scalability and economy.

Furthermore, Hadoop employs MapReduce to run processing in parallel, which both stores and retrieves data faster than information residing in a traditional database. Traditional databases are great for handling predictable and constant workflows; otherwise, you need Hadoop’s power of scalable infrastructure.

5 Advantages of Hadoop for Big Data

Hadoop was created to deal with big data, so it’s hardly surprising that it offers so many benefits. The five main benefits are:

- Speed. Hadoop’s concurrent processing, MapReduce model, and HDFS let users run complex queries over large datasets quickly.

- Diversity. Hadoop’s HDFS can store different data formats, like structured, semi-structured, and unstructured.

- Cost-Effective. Hadoop is an open-source data framework.

- Resilient. Data stored in a node is replicated in other cluster nodes, ensuring fault tolerance.

- Scalable. Since Hadoop functions in a distributed environment, you can easily add more servers.

How Is Hadoop Being Used?

Hadoop is used in many different sectors today. The following sectors make use of Hadoop.

1. Financial Sectors:

Hadoop is used to detect fraud in the financial sector and to analyse fraud patterns. Credit card companies also use Hadoop to identify the right customers for their products.

2. Healthcare Sectors:

Hadoop is used to analyse huge volumes of data from medical devices, clinical data, medical reports, etc. Hadoop analyses and scans the reports thoroughly to reduce manual work.

3. Hadoop Applications in the Retail Industry:

Retailers use Hadoop to improve their sales. Hadoop helps in tracking the products bought by customers, in predicting the price range of products, and in bringing products online. These advantages of Hadoop help the retail industry a lot.

4. Security and Law Enforcement:

The National Security Agency of the USA uses Hadoop to prevent terrorist attacks. Police use data tools to chase criminals and predict their plans. Hadoop is also used in defence, cybersecurity, etc.

5. Hadoop Uses in Advertisements:

Hadoop is also used in the advertising sector. It is used for capturing video, analysing transactions, and handling social media platforms. The data analysed is generated through social media platforms like Facebook, Instagram, etc. Hadoop is also used in the promotion of products.

There are many more advantages of Hadoop in daily life as well as in the software sector.

Hadoop Use Case

In this case study, we will discuss how Hadoop can combat fraudulent activities. Let us look at the case of Zions Bancorporation. Their main challenge lay in how the Zions security team could combat the fraudulent activities taking place. The problem was that they used an RDBMS, which was unable to store and analyze huge amounts of data.

In other words, they were only able to analyze small amounts of data. But with a flood of customers coming in, there were so many things they couldn’t keep track of, which left them vulnerable to fraudulent activities.

They began to use parallel processing. However, the data was unstructured, and analyzing it was not possible. Not only did they have a huge amount of data that could not get into their databases, but they also had unstructured data.

Hadoop enabled the Zions team to pull all those massive amounts of data together and store them in one place. It also became possible to process and analyze the huge amounts of unstructured data that they had. It was more time-efficient, and the in-depth analysis of various data formats became easier through Hadoop. The Zions team could now detect everything from malware, spear phishing, and phishing attempts to account takeovers.

Challenges of Using Hadoop

Despite Hadoop’s awesomeness, it’s not all hearts and flowers. Hadoop comes with its own issues, such as:

- There’s a steep learning curve. If you want to run a query in Hadoop’s file system, you need to write MapReduce functions with Java, a process that is non-intuitive. Also, the ecosystem is made up of lots of components.

- Not every dataset can be handled the same. Hadoop doesn’t give you a “one size fits all” advantage. Different components run things differently, and you need to sort them out with experience.

- MapReduce is limited. Yes, it’s a great programming model, but MapReduce uses a file-intensive approach that isn’t ideal for real-time interactive iterative tasks or data analytics.

- Security is an issue. There is a lot of data out there, and much of it is sensitive. Hadoop still needs to incorporate the proper authentication, data encryption, provisioning, and frequent auditing practices.


Conclusion

Hadoop is a widely used Big Data technology for storing, processing, and analyzing large datasets. After reading this article on what Hadoop is, you should understand how Big Data evolved and the challenges it brought with it. You have also covered the basics of Hadoop, its components, and how they work. Do you have any questions related to this What Is Hadoop article? If you do, please put them in the comments section of this article. Our team will help you resolve your queries.


Source: www.simplilearn.com