Machine learning models are the mathematical engines that drive artificial intelligence, and they are therefore essential to a successful AI implementation. In fact, you could say that your AI is only as good as the machine learning models that drive it.

So, now convinced of the importance of a good machine learning model, you apply yourself to the task, and after some hard work, you finally create what you believe to be a great machine learning model. Congratulations!

But wait. How can you tell whether your machine learning model is as good as you believe it is? Clearly, you need an objective way of measuring your model's performance and determining whether it's good enough for implementation. This is where a ROC curve helps.

This article covers everything you need to know about ROC curves. We will define ROC curves and the term "area under the ROC curve," explain how to use ROC curves in performance modeling, and cover a wealth of other useful information. We begin with some definitions.

What Is a ROC Curve?

A ROC (which stands for "receiver operating characteristic") curve is a graph that shows a classification model's performance at all classification thresholds. It is a probability curve that plots two parameters, the True Positive Rate (TPR) against the False Positive Rate (FPR), at different threshold values, and it separates the so-called "signal" from the "noise."

If you lower the classification threshold, more items get classified as positive, which increases both the False Positives and the True Positives.

ROC Curve

An ROC (Receiver Operating Characteristic) curve is a graphical representation used to evaluate the performance of a binary classifier. It plots two key metrics:

- True Positive Rate (TPR): Also known as sensitivity or recall, it measures the proportion of actual positives correctly identified by the model. It is calculated as:

TPR = True Positives (TP) / (True Positives (TP) + False Negatives (FN))

- False Positive Rate (FPR): This measures the proportion of actual negatives incorrectly identified as positives by the model. It is calculated as:

FPR = False Positives (FP) / (False Positives (FP) + True Negatives (TN))

The ROC curve plots TPR (y-axis) against FPR (x-axis) at various threshold settings. Here is a more detailed explanation of the four outcomes these metrics are built from:

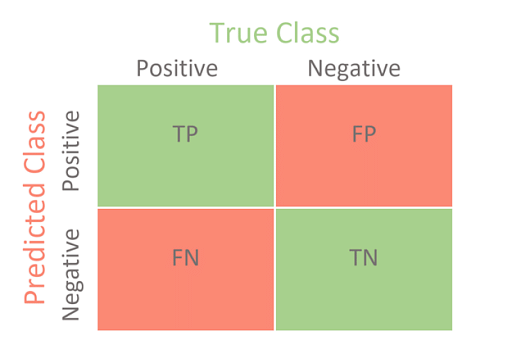

- True Positive (TP): The instance is positive, and the model correctly classifies it as positive.

- False Positive (FP): The instance is negative, but the model incorrectly classifies it as positive.

- True Negative (TN): The instance is negative, and the model correctly classifies it as negative.

- False Negative (FN): The instance is positive, but the model incorrectly classifies it as negative.
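To make the four outcomes concrete, here is a minimal Python sketch that counts them from a list of actual labels and a list of predicted labels, then derives TPR and FPR with the formulas above. It assumes labels are encoded as 1 (positive) and 0 (negative); the function names and the ten-sample data are hypothetical, chosen only for illustration.

```python
def confusion_counts(y_true, y_pred):
    """Count (TP, FP, TN, FN), assuming binary labels: 1 = positive, 0 = negative."""
    tp = fp = tn = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if actual == 1 and predicted == 1:
            tp += 1  # positive instance, classified as positive
        elif actual == 0 and predicted == 1:
            fp += 1  # negative instance, classified as positive
        elif actual == 0 and predicted == 0:
            tn += 1  # negative instance, classified as negative
        else:
            fn += 1  # positive instance, classified as negative
    return tp, fp, tn, fn


def tpr_fpr(y_true, y_pred):
    """Return (TPR, FPR) = (TP / (TP + FN), FP / (FP + TN))."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    tpr = tp / (tp + fn) if tp + fn else 0.0  # guard: no actual positives
    fpr = fp / (fp + tn) if fp + tn else 0.0  # guard: no actual negatives
    return tpr, fpr


# Hypothetical data: 4 actual positives, 6 actual negatives.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
print(confusion_counts(y_true, y_pred))  # (3, 1, 5, 1)
print(tpr_fpr(y_true, y_pred))           # (0.75, 0.16666666666666666)
```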

Interpreting the ROC Curve

- A curve closer to the top-left corner indicates a better-performing model.

- The diagonal line (from (0,0) to (1,1)) represents a random classifier.

- The area under the ROC curve (AUC) is a single scalar value that measures the model's overall performance. It ranges from 0 to 1, and a higher AUC indicates a better-performing model.

Area Under the ROC Curve (AUC)

The Area Under the ROC Curve (AUC) is a single scalar value that summarizes the overall performance of a binary classification model. It measures the model's ability to distinguish between the positive and negative classes. Here is what you need to know about AUC:

Key Points About AUC

Range of AUC:

- The AUC value ranges from 0 to 1.

- An AUC of 0.5 indicates a model that performs no better than random chance.

- An AUC closer to 1 indicates a model with excellent performance.

Interpretation of AUC Values:

- 0.9 – 1.0: Excellent

- 0.8 – 0.9: Good

- 0.7 – 0.8: Fair

- 0.6 – 0.7: Poor

- 0.5 – 0.6: Fail

Advantages of Using AUC:

- Threshold Independent: AUC evaluates the model's performance across all possible classification thresholds.

- Scale Invariant: AUC measures how well the predictions are ranked rather than their absolute values.

Calculation of AUC:

- AUC is typically calculated using numerical integration methods, such as the trapezoidal rule, applied to the ROC curve.

- In practical terms, libraries like Scikit-learn in Python provide functions to compute AUC directly from model predictions and true labels.
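A minimal sketch of the trapezoidal rule applied to ROC points follows. It assumes you already have the (FPR, TPR) points sorted by increasing FPR, starting at (0, 0) and ending at (1, 1); the function name and the sample points are hypothetical.

```python
def trapezoid_auc(fpr, tpr):
    """Area under the ROC polyline (x = FPR, y = TPR) via the trapezoidal rule.

    Assumes the points are sorted by increasing FPR.
    """
    area = 0.0
    for i in range(1, len(fpr)):
        width = fpr[i] - fpr[i - 1]               # step along the x-axis
        avg_height = (tpr[i] + tpr[i - 1]) / 2.0  # mean TPR over that step
        area += width * avg_height
    return area


# Hypothetical ROC points, for illustration only.
fpr = [0.0, 0.0, 0.5, 0.5, 1.0]
tpr = [0.0, 0.5, 0.5, 1.0, 1.0]
print(trapezoid_auc(fpr, tpr))  # 0.75
```

In practice, scikit-learn's `sklearn.metrics.auc(x, y)` applies the same trapezoidal rule to a set of points, and `sklearn.metrics.roc_auc_score(y_true, y_score)` computes AUC directly from true labels and predicted scores.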

Key Terms Used in AUC and ROC Curve

1. True Positive (TP)

- Definition: The number of positive instances correctly identified by the model.

- Example: In a medical test, a TP is when the test correctly identifies a person with a disease as having the disease.

2. True Negative (TN)

- Definition: The number of negative instances correctly identified by the model.

- Example: In a spam filter, a TN is when a legitimate email is correctly identified as not spam.

3. False Positive (FP)

- Definition: The number of negative instances incorrectly identified as positive by the model.

- Example: In a fraud detection system, an FP is when a legitimate transaction is incorrectly flagged as fraudulent.

4. False Negative (FN)

- Definition: The number of positive instances incorrectly identified as negative by the model.

- Example: In a cancer screening test, an FN is when a person with cancer is incorrectly identified as not having cancer.

5. True Positive Rate (TPR)

- Definition: Also known as sensitivity or recall, it measures the proportion of actual positives that are correctly identified by the model.

- Formula: TPR = TP / (TP + FN)

- Example: A TPR of 0.8 means 80% of actual positive cases are correctly identified.

6. False Positive Rate (FPR)

- Definition: It measures the proportion of actual negatives that are incorrectly identified as positive by the model.

- Formula: FPR = FP / (FP + TN)

- Example: An FPR of 0.1 means 10% of actual negative cases are incorrectly identified as positive.

7. Threshold

- Definition: The value at which the model's prediction is converted into a binary classification. By adjusting the threshold, different TPR and FPR values can be obtained.

- Example: In a binary classification problem, a threshold of 0.5 might mean that predicted probabilities above 0.5 are classified as positive.

8. ROC Curve

- Definition: A graphical plot that illustrates the diagnostic ability of a binary classifier as its discrimination threshold is varied. It plots TPR against FPR at various threshold settings.

- Example: An ROC curve close to the top-left corner indicates a better-performing model.

9. Area Under the Curve (AUC)

- Definition: A single scalar value that summarizes the overall performance of a binary classifier across all possible thresholds. It is the area under the ROC curve.

- Range: 0 to 1, where 1 indicates perfect performance and 0.5 indicates performance no better than random guessing.

- Example: An AUC of 0.9 indicates excellent performance.

10. Precision

- Definition: The proportion of positive identifications that are actually correct.

- Formula: Precision = TP / (TP + FP)

- Example: A precision of 0.75 means 75% of the instances classified as positive are actually positive.

11. Recall (Sensitivity)

- Definition: Another term for True Positive Rate (TPR), measuring the proportion of actual positives correctly identified.

- Example: A recall of 0.8 means 80% of actual positive cases are correctly identified.

12. Specificity

- Definition: The proportion of actual negatives correctly identified by the model.

- Formula: Specificity = TN / (TN + FP)

- Example: A specificity of 0.9 means 90% of actual negative cases are correctly identified.
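All of the formulas in this list come from the same four counts. The following sketch uses hypothetical counts (12 TP, 4 FP, 36 TN, 3 FN), chosen so the results reproduce the examples above: TPR and recall 0.8, FPR 0.1, precision 0.75, specificity 0.9. Returning 0.0 on a zero denominator is a convention assumed here, not a standard.

```python
def metrics_from_counts(tp, fp, tn, fn):
    """Derive the key-term metrics from confusion-matrix counts."""

    def safe_div(num, den):
        # Assumed convention: report 0.0 instead of raising on an empty class.
        return num / den if den else 0.0

    recall = safe_div(tp, tp + fn)       # TPR, sensitivity
    specificity = safe_div(tn, tn + fp)  # true negative rate
    return {
        "tpr": recall,
        "fpr": safe_div(fp, fp + tn),    # equals 1 - specificity
        "precision": safe_div(tp, tp + fp),
        "recall": recall,
        "specificity": specificity,
    }


m = metrics_from_counts(tp=12, fp=4, tn=36, fn=3)
print(m)
# {'tpr': 0.8, 'fpr': 0.1, 'precision': 0.75, 'recall': 0.8, 'specificity': 0.9}
```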

What Is a ROC Curve: How Do You Assess Model Performance?

AUC is a valuable tool for assessing model performance. An excellent model has an AUC close to 1, indicating a good measure of separability. Conversely, a poor model's AUC leans closer to 0, showing the worst measure of separability. In fact, an AUC near 0 means the model is reversing the result: it predicts the negative class as positive and vice versa, showing 0s as 1s and 1s as 0s. Finally, an AUC of 0.5 shows that the model has no class-separation capacity at all.

So, when we have a 0.5 < AUC < 1 result, there is a high chance that the classifier can distinguish the positive class values from the negative class values. That's because, more often than not, the classifier scores a positive instance above a negative one, so across thresholds it detects more True Positives and True Negatives than False Negatives and False Positives.

The Relation Between Sensitivity, Specificity, FPR, and Threshold

Before we examine the relation between sensitivity, specificity, FPR, and threshold, we should first cover their definitions in the context of machine learning models. For that, we'll need a confusion matrix, which cross-tabulates a model's predicted classes against the actual classes. For a binary classifier, it has four cells:

TP stands for True Positive, and TN means True Negative. FP stands for False Positive, and FN means False Negative.

- Sensitivity: Sensitivity, also termed "recall," is the metric that shows a model's ability to correctly predict the actual positives. It shows what proportion of the positive class was classified correctly. For example, when trying to figure out how many people have the flu, sensitivity, or True Positive Rate, measures the proportion of people who have the flu and were correctly predicted as having it.

Here's how to calculate sensitivity mathematically:

Sensitivity = True Positive / (True Positive + False Negative)

- Specificity: The specificity metric evaluates a model's ability to correctly predict the actual negatives. It shows what proportion of the negative class was classified correctly. For example, in our flu scenario, specificity measures the proportion of people who don't have the flu and were correctly predicted as not suffering from it.

Here's how to calculate specificity:

Specificity = True Negative / (True Negative + False Positive)

- FPR: FPR stands for False Positive Rate and shows what proportion of the negative class was incorrectly classified. This formula shows how we calculate FPR:

FPR = 1 - Specificity

- Threshold: The threshold is the specified cut-off point for an observation to be classified as either 0 or 1. Typically, 0.5 is used as the default threshold, although that is not always the case.

Sensitivity and specificity are inversely related: as the threshold moves, boosting sensitivity makes specificity drop, and vice versa. Specifically, we get more positive predictions when we decrease the threshold, thereby raising the sensitivity and lowering the specificity.

On the other hand, if we raise the threshold, we will get more negative predictions, which results in higher specificity and lower sensitivity.

And since FPR is 1 - specificity, when we increase TPR, FPR also increases, and vice versa.
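The trade-off can be checked numerically. This sketch uses hypothetical flu-screening scores and labels a case positive when its score is at or above the threshold (an assumption; some tools use a strict ">"), then reports sensitivity, specificity, and FPR at three thresholds. It assumes both classes are present in the data.

```python
def sens_spec_at(y_true, scores, threshold):
    """Sensitivity and specificity when scores >= threshold are labeled positive."""
    tp = sum(1 for y, s in zip(y_true, scores) if y == 1 and s >= threshold)
    fn = sum(1 for y, s in zip(y_true, scores) if y == 1 and s < threshold)
    tn = sum(1 for y, s in zip(y_true, scores) if y == 0 and s < threshold)
    fp = sum(1 for y, s in zip(y_true, scores) if y == 0 and s >= threshold)
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical flu-screening data (1 = has flu, 0 = no flu).
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
scores = [0.95, 0.7, 0.55, 0.3, 0.6, 0.4, 0.2, 0.1]

for t in (0.8, 0.5, 0.25):  # progressively lower thresholds
    sens, spec = sens_spec_at(y_true, scores, t)
    print(f"threshold={t}: sensitivity={sens:.2f}, specificity={spec:.2f}, FPR={1 - spec:.2f}")
```

Lowering the threshold from 0.8 to 0.25 raises sensitivity from 0.25 to 1.00, while specificity falls from 1.00 to 0.50 and FPR rises from 0.00 to 0.50.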

How AUC-ROC Works

The AUC-ROC (Area Under the Curve – Receiver Operating Characteristic) is a performance measurement for classification problems at various threshold settings. Here is how it works:

Threshold Variation:

- The ROC curve is generated by plotting the True Positive Rate (TPR) against the False Positive Rate (FPR) at various threshold levels.

- By varying the threshold, different pairs of TPR and FPR values are obtained.

Plotting the ROC Curve:

- True Positive Rate (TPR), also known as Sensitivity or Recall, is plotted on the y-axis. It is the ratio of true positives to the sum of true positives and false negatives.

- False Positive Rate (FPR) is plotted on the x-axis. It is the ratio of false positives to the sum of false positives and true negatives.

Calculating AUC:

- The area under the ROC curve (AUC) quantifies the overall ability of the model to discriminate between positive and negative classes.

- AUC values range from 0 to 1. A value of 0.5 suggests no discrimination (random performance), while a value closer to 1 indicates excellent model performance.
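The steps above (vary the threshold, collect (FPR, TPR) pairs, integrate) can be sketched end to end in plain Python. This is a minimal illustration under stated assumptions: labels are 0/1, both classes are present, each distinct score serves as a threshold (score >= threshold counts as positive), and the sample data is hypothetical.

```python
def roc_points(y_true, scores):
    """Return (fpr, tpr) lists, sweeping the threshold from high to low.

    Starts at (0, 0), i.e. a threshold above every score, and then uses each
    distinct score as a threshold, ending at (1, 1).
    """
    n_pos = sum(1 for y in y_true if y == 1)
    n_neg = len(y_true) - n_pos
    fpr, tpr = [0.0], [0.0]
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(y_true, scores) if y == 1 and s >= t)
        fp = sum(1 for y, s in zip(y_true, scores) if y == 0 and s >= t)
        fpr.append(fp / n_neg)
        tpr.append(tp / n_pos)
    return fpr, tpr


def auc_trapezoid(fpr, tpr):
    """Trapezoidal area under the (fpr, tpr) polyline."""
    return sum(
        (fpr[i] - fpr[i - 1]) * (tpr[i] + tpr[i - 1]) / 2
        for i in range(1, len(fpr))
    )


y_true = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.5, 0.3, 0.1]
fpr, tpr = roc_points(y_true, scores)
print(list(zip(fpr, tpr)))        # the ROC curve's vertices
print(auc_trapezoid(fpr, tpr))    # approximately 8/9
```

For real projects, `sklearn.metrics.roc_curve(y_true, y_score)` returns the FPR, TPR, and threshold arrays for you. On this toy data, the trapezoidal area comes out to 8/9, which matches the probability that a random positive is scored above a random negative.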

When to Use the AUC-ROC Evaluation Metric?

The AUC-ROC metric is particularly useful in the following scenarios:

- Binary Classification Problems: It is primarily used for binary classification tasks with only two classes.

- Imbalanced Datasets: AUC-ROC can help when dealing with imbalanced datasets because it provides an aggregate performance measure across all possible classification thresholds, although, as noted in the FAQs, it can be less informative when the imbalance is extreme.

- Model Comparison: It is useful for comparing the performance of different models. A higher AUC value indicates a better-performing model.

- Threshold-Independent Evaluation: When you need a performance metric that doesn't depend on selecting a specific classification threshold.

Understanding the AUC-ROC Curve

1. ROC Curve Interpretation

- Closer to the Top-Left Corner: A curve that hugs the top-left corner indicates a high-performing model with high TPR and low FPR.

- Diagonal Line: A curve along the diagonal line (from (0,0) to (1,1)) indicates a model with no discrimination ability, equivalent to random guessing.

2. AUC Value Interpretation

- 0.9 – 1.0: Excellent discrimination ability.

- 0.8 – 0.9: Good discrimination ability.

- 0.7 – 0.8: Fair discrimination ability.

- 0.6 – 0.7: Poor discrimination ability.

- 0.5 – 0.6: Fail; the model performs little better than random guessing.

How to Use the AUC-ROC Curve for a Multi-Class Model

When using a multi-class model, we can use the One vs. All methodology (also called One-vs-Rest) to plot N AUC-ROC curves for N classes. One vs. All gives us a way to leverage binary classification: if you have a classification problem with N possible solutions, One vs. All provides one binary classifier for each possible outcome.

So, for example, suppose you have three classes named 0, 1, and 2. You will have one ROC curve for class 0 classified against classes 1 and 2, another for class 1 classified against classes 0 and 2, and finally, a third for class 2 classified against classes 0 and 1.
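Here is a minimal One vs. All sketch for the three-class case just described. It assumes the model outputs one score per class for each sample (`class_scores`, a hypothetical structure with hypothetical values), treats each class in turn as positive and the other two as negative, and computes a rank-based AUC for each. Averaging the per-class values with equal weight (a macro average) is one common way to summarize them, not the only one.

```python
def binary_auc(y_true, scores):
    """Rank-based AUC: P(random positive scores above random negative), ties = 0.5."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def one_vs_rest_auc(labels, class_scores, classes):
    """One AUC per class: that class is 'positive', every other class 'negative'.

    class_scores[i][k] is the model's score for sample i belonging to classes[k].
    """
    result = {}
    for k, c in enumerate(classes):
        y_binary = [1 if label == c else 0 for label in labels]  # c vs. the rest
        scores_c = [row[k] for row in class_scores]
        result[c] = binary_auc(y_binary, scores_c)
    return result


# Hypothetical three-class example with classes 0, 1, and 2.
labels = [0, 0, 1, 1, 2, 2]
class_scores = [
    [0.7, 0.2, 0.1],
    [0.5, 0.3, 0.2],
    [0.2, 0.6, 0.2],
    [0.5, 0.4, 0.1],
    [0.1, 0.3, 0.6],
    [0.3, 0.2, 0.5],
]
per_class = one_vs_rest_auc(labels, class_scores, classes=[0, 1, 2])
macro_auc = sum(per_class.values()) / len(per_class)
print(per_class, macro_auc)
```

Here class 0 gets an AUC of 0.9375 because one class-1 sample ties a class-0 sample on the class-0 score, while classes 1 and 2 are separated perfectly. scikit-learn offers a comparable computation through `roc_auc_score(y_true, y_score, multi_class="ovr")`; check its documentation for the exact averaging options.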

We should take a moment to explain the One vs. All methodology to better answer the question "what is a ROC curve?". This methodology is made up of N separate binary classifiers. During training, the model runs through the sequence of binary classifiers, training each one to answer a single classification question. For instance, if you have a picture of a cat, you can train four different recognizers: one sees the image as a positive example (the cat), and the other three see it as a negative example (not the cat). It would look like this:

- Is this image a rutabaga? No

- Is this image a cat? Yes

- Is this image a dog? No

- Is this image a hammer? No

This methodology works well with a small number of total classes. However, as the number of classes rises, the model becomes increasingly inefficient.


Are You Interested in a Career in Machine Learning?

There's a lot to learn about machine learning, as you can tell from this "what is a ROC curve" article! However, both machine learning and artificial intelligence are the waves of the future, so it's worth acquiring skills and knowledge in these fields. Who knows? You could find yourself in an exciting machine learning career!

If you want a career in machine learning, Simplilearn can help you on your way. The AI and ML Certification gives students an in-depth overview of machine learning topics. You will learn to develop algorithms using supervised and unsupervised learning, work with real-time data, and learn about concepts like regression, classification, and time series modeling. You will also learn how Python can be used to draw predictions from data. In addition, the program features 58 hours of applied learning, interactive labs, four hands-on projects, and mentoring.

And since machine learning and artificial intelligence work together so often, check out Simplilearn's Artificial Intelligence Engineer Master's program and cover all your bases.

According to Glassdoor, Machine Learning Engineers in the United States enjoy an average annual base pay of $133,001. Payscale.com reports that Machine Learning Engineers in India can potentially earn ₹732,566 a year on average.

So visit Simplilearn today, and explore the rich possibilities of a rewarding vocation in the machine learning field!

FAQs

1. What does a perfect AUC-ROC curve look like?

A perfect AUC-ROC curve reaches the top-left corner of the plot, indicating a True Positive Rate (TPR) of 1 and a False Positive Rate (FPR) of 0 for some threshold. This means the model perfectly distinguishes between the positive and negative classes, resulting in an AUC value of 1.0.

2. What does an AUC value of 0.5 signify?

An AUC value of 0.5 suggests that the model's performance is no better than random guessing: it cannot distinguish between the positive and negative classes. This is the case, for example, when the True Positive Rate (TPR) and False Positive Rate (FPR) are equal at every threshold, so the curve follows the diagonal.

3. How do you compare ROC curves of different models?

To compare ROC curves of different models, plot each model's ROC curve on the same graph and examine their shapes and positions. The model whose ROC curve lies closest to the top-left corner and has the highest Area Under the Curve (AUC) value generally performs better.

4. What are some limitations of the ROC curve?

Some limitations of the ROC curve include:

- It can be less informative for highly imbalanced datasets: when negatives vastly outnumber positives, the many true negatives keep the FPR (1 - specificity) low even when the model produces many false positives.

- It does not account for the costs of false positives and false negatives, which can be crucial in some applications.

- Interpretation can be less intuitive than with precision-recall curves in certain contexts.

5. What are common metrics derived from ROC curves?

Common metrics derived from ROC curves include:

- True Positive Rate (TPR): Also known as sensitivity or recall, it measures the proportion of actual positives correctly identified.

- False Positive Rate (FPR): Measures the proportion of actual negatives incorrectly identified as positives.

- Area Under the Curve (AUC): Summarizes the model's overall performance across all thresholds.

- Optimal Threshold: The threshold value that maximizes the TPR while minimizing the FPR.
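One common way to turn "maximize TPR while minimizing FPR" into a single rule is Youden's J statistic, J = TPR - FPR, choosing the threshold where J is largest. The sketch below illustrates that one choice, not the only definition of an optimal threshold. It uses hypothetical data, treats scores at or above the threshold as positive, and assumes 0/1 labels with both classes present.

```python
def best_threshold_youden(y_true, scores):
    """Pick the threshold that maximizes Youden's J = TPR - FPR.

    Candidate thresholds are the distinct scores; score >= threshold -> positive.
    """
    n_pos = sum(y_true)
    n_neg = len(y_true) - n_pos
    best_t, best_j = None, float("-inf")
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(y_true, scores) if y == 1 and s >= t)
        fp = sum(1 for y, s in zip(y_true, scores) if y == 0 and s >= t)
        j = tp / n_pos - fp / n_neg
        if j > best_j:  # strict '>' keeps the highest threshold among ties
            best_t, best_j = t, j
    return best_t, best_j


# Hypothetical scores for 4 positives followed by 4 negatives.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.7, 0.3, 0.6, 0.35, 0.2, 0.1]
print(best_threshold_youden(y_true, scores))  # (0.7, 0.75)
```

If false positives and false negatives carry very different costs, a cost-weighted criterion may be more appropriate than J.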

Source: www.simplilearn.com